Summary:

What do Tinder, Airbnb, Pokemon Go, and Pinterest have in common? All of them run on the Kubernetes platform. This article explains why IT executives should evaluate this technology, how it can help businesses, and the circumstances in which it should not be used.

We will take a look at enterprise IT use cases in the real world, as well as some of the challenges this technology faces, and how to properly utilize Kubernetes to get the most from it.

What is Kubernetes Used for?

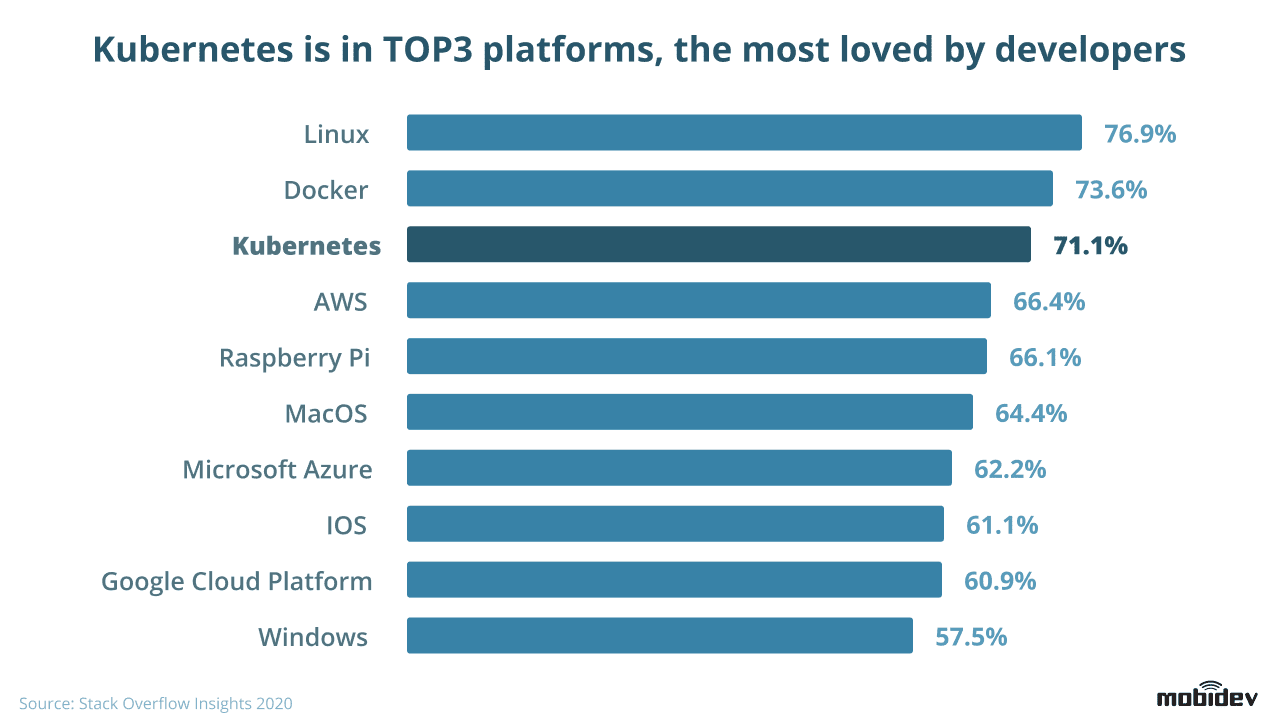

For those who keep abreast of the latest trends in web development, it may suddenly seem like Kubernetes is appearing all over, having become increasingly popular. First rolled out to developers in 2015, Kubernetes has gradually become a platform almost universally loved by developers.

But does Kubernetes make a similarly perfect fit for technology executives? And if so, why?

Kubernetes is an open source platform developed by Google, the search engine giant that made $85.014 billion from the U.S. It allows users to coordinate and run containerized applications across multiple devices or machines. Kubernetes’ purpose is centered on control of the entire lifecycle of a containerized application, with methods providing improved availability and scalability.

Kubernetes users are able to manage how their applications interact with other applications outside of the Kubernetes platform, and govern how and when these applications run.

Users can easily scale down or up as needs require, can roll out updates seamlessly, and can test features and troubleshoot difficult deployments by switching traffic between multiple versions of the applications.
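As a rough illustration of the scaling and update controls described above, the sketch below builds a Kubernetes Deployment manifest as a plain Python dictionary and prints it as JSON, a format `kubectl apply -f` accepts alongside YAML. The application name `web`, the image `example.com/web:1.1`, and the replica count are hypothetical placeholders chosen for this example.

```python
import json


def build_deployment(name: str, image: str, replicas: int) -> dict:
    """Return a minimal apps/v1 Deployment manifest as a dictionary."""
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            # Number of identical pods Kubernetes should keep running.
            "replicas": replicas,
            "selector": {"matchLabels": {"app": name}},
            # Replace pods gradually when the pod template changes.
            "strategy": {
                "type": "RollingUpdate",
                "rollingUpdate": {"maxSurge": 1, "maxUnavailable": 0},
            },
            "template": {
                "metadata": {"labels": {"app": name}},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical values, for illustration only.
    manifest = build_deployment("web", "example.com/web:1.1", replicas=3)
    print(json.dumps(manifest, indent=2))
```

In this sketch, changing `replicas` scales the application up or down, while changing the image tag and re-applying the manifest asks Kubernetes to replace pods gradually under the `RollingUpdate` strategy.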

What is the Difference Between Kubernetes and Docker?

Frequently, discussions about Kubernetes and Docker take the form of ‘Should I choose Kubernetes or Docker?’ But this framing is slightly misleading, as the two platforms are not strict alternatives. It’s absolutely possible to run Kubernetes without Docker, and vice versa. But each can frequently benefit from being used alongside the other.

Docker is all about containers, and is frequently used to help with the containerization of applications. Note that Docker can be installed on any machine on its own to run containerized apps.

The core of containerization is the concept of running an application in a way that isolates it completely from the other elements of the system. In essence, the application acts as though it had its own instance of the operating system, even though in most cases multiple containers are on the same OS. Docker acts as the manager for this process, handling the creation and running of these containers within a given OS.

Now, imagine you’re using Docker on multiple hosts. This is where Kubernetes comes into the picture. Each host, or node, can be either a virtual machine or a physical server. Kubernetes is able to automate and manage elements like load balancing, scaling, security, and the provisioning of containers, linking all these hosts through a single dashboard or command line. We refer to a collection of Docker hosts (or any group of nodes) managed this way as a Kubernetes cluster.

The next logical question is: Why would it be necessary to create more than one host or node? There are two primary reasons for it.

- First, to make your applications scalable. If the workload on the application goes up, you’re able to create more containers, or else increase the number of nodes within the Kubernetes cluster.

- Second, to increase the robustness of the infrastructure. Even if a node or a subset of nodes goes offline, your application remains online.
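Both reasons can be illustrated with a toy model. The sketch below is not the Kubernetes scheduler, which weighs many more factors. It simply spreads replicas round-robin across nodes and places them again when a node goes offline. The node names and the replica count are hypothetical.

```python
def place_replicas(replicas: int, nodes: list[str]) -> dict[str, int]:
    """Spread replicas across nodes round-robin and return a count per node."""
    if not nodes:
        raise ValueError("no healthy nodes left to run the application")
    placement = {node: 0 for node in nodes}
    for i in range(replicas):
        placement[nodes[i % len(nodes)]] += 1
    return placement


def reschedule_after_failure(
    replicas: int, nodes: list[str], failed: set[str]
) -> dict[str, int]:
    """Place the same number of replicas on the nodes that are still healthy."""
    healthy = [node for node in nodes if node not in failed]
    return place_replicas(replicas, healthy)


if __name__ == "__main__":
    cluster = ["node-a", "node-b", "node-c"]  # hypothetical node names
    print(place_replicas(6, cluster))
    # One node goes offline; all six replicas still find a home.
    print(reschedule_after_failure(6, cluster, failed={"node-b"}))
```

With a single node, the same failure would leave nowhere to place the replicas, which is the robustness argument in miniature.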

Kubernetes is able to automate the removing, adding, managing and updating of containers. In fact, Kubernetes can perhaps best be thought of as a platform for orchestrating containers. Meanwhile, Docker allows for the creation and maintenance of containers to begin with, at a lower level.

Why and When to Use Kubernetes

Beginning with a series of Docker-created containers, Kubernetes manages the traffic and allocates resources for this service.

In doing so, it makes numerous aspects of managing a service-oriented application infrastructure simpler and easier. In conjunction with the latest CI/CD (continuous integration/continuous deployment) tools, Kubernetes can scale these applications without requiring a major engineering project.

We can take a look at a few of these Kubernetes use cases more closely.

1. Kubernetes for Container Orchestration

Containers, in a vacuum, are wonderful, providing a simple way to bundle and deploy your services with a lightweight creation process. This allows for positive attributes like efficient use of resources, immutability, and process isolation.

However, when you’re actually implementing your project in production, it’s very possible that you’ll end up dealing with anywhere from dozens to thousands of containers, especially over time. If this work is being done manually, it’s likely to require a dedicated container management team to update, connect, manage and deploy these containers.

Simply running the containers isn’t sufficient. You’ll also need to be able to accomplish the following:

- Scale either down or up in line with changes in demand

- Orchestrate and integrate various modular parts

- Communicate across clusters

- Ensure your containers are fault tolerant

It’s natural to think that containers should be able to handle all that without the need for a platform like Kubernetes. But the reality is that the containers themselves operate at a lower level of organization. In order to gain the significant benefits of a system built with containers, it’s necessary to use container orchestrator tools like Kubernetes, tools that sit on top of and manage containers.
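To make "scale either down or up in line with changes in demand" concrete, here is a minimal sketch of the replica calculation documented for the Kubernetes Horizontal Pod Autoscaler: the desired replica count is the current count multiplied by the ratio of the observed metric to the target metric, rounded up. The real autoscaler also applies a tolerance band and stabilization behavior, which this sketch leaves out. The default bounds and the example numbers are assumptions for illustration.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Return ceil(current_replicas * current_metric / target_metric), clamped.

    The metric can be any per-pod average, for example CPU utilization in percent.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # Four pods averaging 90% CPU against a 60% target: scale up.
    print(desired_replicas(4, current_metric=90, target_metric=60))
    # Four pods averaging 30% CPU against a 60% target: scale down.
    print(desired_replicas(4, current_metric=30, target_metric=60))
```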

2. Kubernetes for Microservices Management

The concept of microservices is far from a new idea. Software architects have worked at breaking up large-scale applications into broadly reusable components for decades. Microservices offer multiple benefits, from a greater degree of resiliency to faster, more flexible models for deployment to simpler automatic testing.

Microservices also let decision makers choose the best tool for any individual task. One piece of your application may benefit more from the productivity boost of a high level language like PHP, while another piece may get more from a high speed language like Go.

But how does Kubernetes fit in with this microservices concept? It’s true that breaking down your large-scale application into these smaller, less rigidly connected microservices will allow for more freedom and independence of action. But it’s still necessary for your team to coordinate while making use of the infrastructure all these independent pieces use to run.

It will be necessary to predict the amount of resources these pieces will need, as well as how your requirements shift under load. You’ll need to allocate partitions within your infrastructure for your various microservices and restrict resource use accordingly.

This is where Kubernetes is able to help. It offers a common framework for describing your infrastructure architecture, which lets you inspect and work through resource usage and sharing issues. If you’re planning on creating a microservice-reliant architecture, Kubernetes can be massively beneficial.
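In Kubernetes, this kind of partitioning is usually expressed through per-container resource requests and limits, and through quotas set on a namespace. The sketch below models only the arithmetic behind such a check: it adds up what a set of microservices requests and compares the total with a quota. The service names, replica counts, and quota figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServiceRequest:
    name: str
    replicas: int
    cpu_millicores: int  # CPU requested per replica (1000 = one core)
    memory_mib: int      # memory requested per replica, in MiB


def total_requests(services: list[ServiceRequest]) -> tuple[int, int]:
    """Return the total (CPU millicores, memory MiB) requested by all replicas."""
    cpu = sum(s.replicas * s.cpu_millicores for s in services)
    memory = sum(s.replicas * s.memory_mib for s in services)
    return cpu, memory


def fits_quota(
    services: list[ServiceRequest], cpu_quota_millicores: int, memory_quota_mib: int
) -> bool:
    """Check whether the combined requests stay within the given quota."""
    cpu, memory = total_requests(services)
    return cpu <= cpu_quota_millicores and memory <= memory_quota_mib


if __name__ == "__main__":
    # Hypothetical microservices sharing one partition of the infrastructure.
    services = [
        ServiceRequest("catalog", replicas=3, cpu_millicores=250, memory_mib=256),
        ServiceRequest("checkout", replicas=2, cpu_millicores=500, memory_mib=512),
    ]
    print(total_requests(services))
    print(fits_quota(services, cpu_quota_millicores=2000, memory_quota_mib=2048))
```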

3. Kubernetes for Cloud Environment Management

It’s no wonder that containers and the tools that manage containers have become increasingly popular, as many modern businesses are shifting toward microservice-based models. These microservice models allow for easy splitting of an application into discrete pieces portioned off into containers run via separate cloud environments. This allows you to choose a host that perfectly suits your needs in each case.

Kubernetes is designed to be deployed anywhere, meaning you can use it on a private cloud, a public cloud, or a hybrid cloud. This allows you to connect with your users no matter where they’re located, with increased security as an added boon. Kubernetes allows you to sidestep the possible issue of ‘vendor lock-in’.

When Not to Use Kubernetes

While Kubernetes is powerful and flexible, it’s not the right tool for every use case or project. Keep in mind that Kubernetes was created to solve a certain set of potential issues and challenges. If you’re not actually facing those challenges, it’s very possible that Kubernetes will be more unwieldy than helpful.

If your project seems to be reaching a point where scaling and deployment necessitate their own dedicated resources, orchestration starts to become a viable choice.

The concepts of orchestration and automation tend to go hand in hand. Automation benefits businesses by increasing efficiency, allowing decreased human interaction with digital systems. With software handling these interactions instead, we see a reduction in errors and cost.

As a general rule, automation means automating one discrete task. In contrast, orchestration refers to automating an entire workflow or process consisting of multiple steps, and frequently multiple systems. First, you build automation into a workflow. Then, if the complexity grows to a certain point, orchestration takes over, governing the automated tasks and making them work in concert.

Kubernetes is not the best choice for:

- Simple or small-scale projects, as it is relatively expensive and more complicated than they need.

- Projects that feature a tiny user base, low load, uncomplicated architecture, and no plans to increase any of that.

- Developing an MVP version, as it’s better to begin with something smaller and less complex, such as Docker Swarm. Then, after you’ve solidified a vision of how your app runs, you can think about switching to Kubernetes.

How is Security Implemented in Kubernetes

For systems run on Kubernetes, general web application security measures should be provided in order to protect against malicious attacks and accidental damage.


Kubernetes Benefits and Advantages

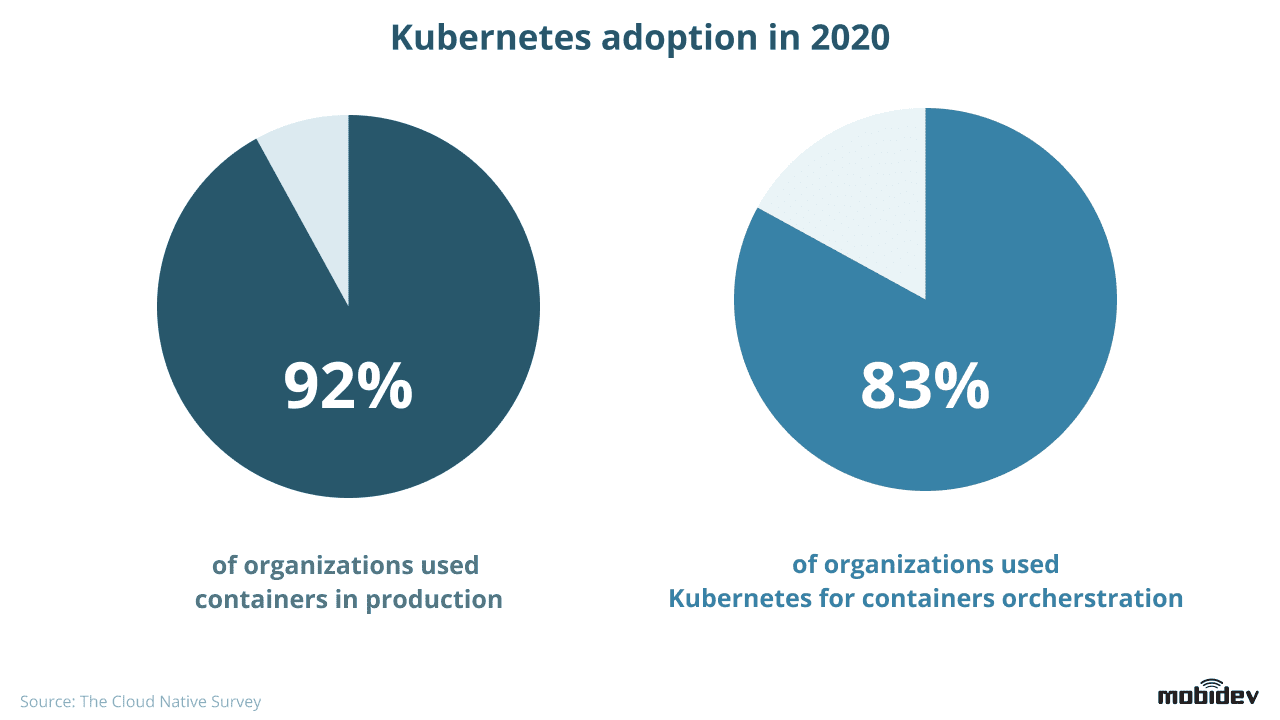

The Cloud Native Survey, which polls Technology/Software organizations, reports that the use of containers in production has increased to 92%, up from 84% the previous year. Use of Kubernetes has reached 83%, up from 78% the previous year.

How are large companies using Kubernetes?

Pokemon Go ran on GKE (Google Container Engine, since renamed Google Kubernetes Engine) using Kubernetes, allowing it to orchestrate a container cluster that spans the entire globe. This let its team focus more efficiently on putting out live changes for their active player base of more than 20 million daily users.

Tinder migrated from its legacy services to Kubernetes, running a cluster made up of more than 1,000 nodes and 200 services, with 15,000 pods and 48,000 containers. This reduced waiting time for EC2 instances from multiple minutes down to seconds, delivering significant reductions in cost.

Kubernetes Use Cases

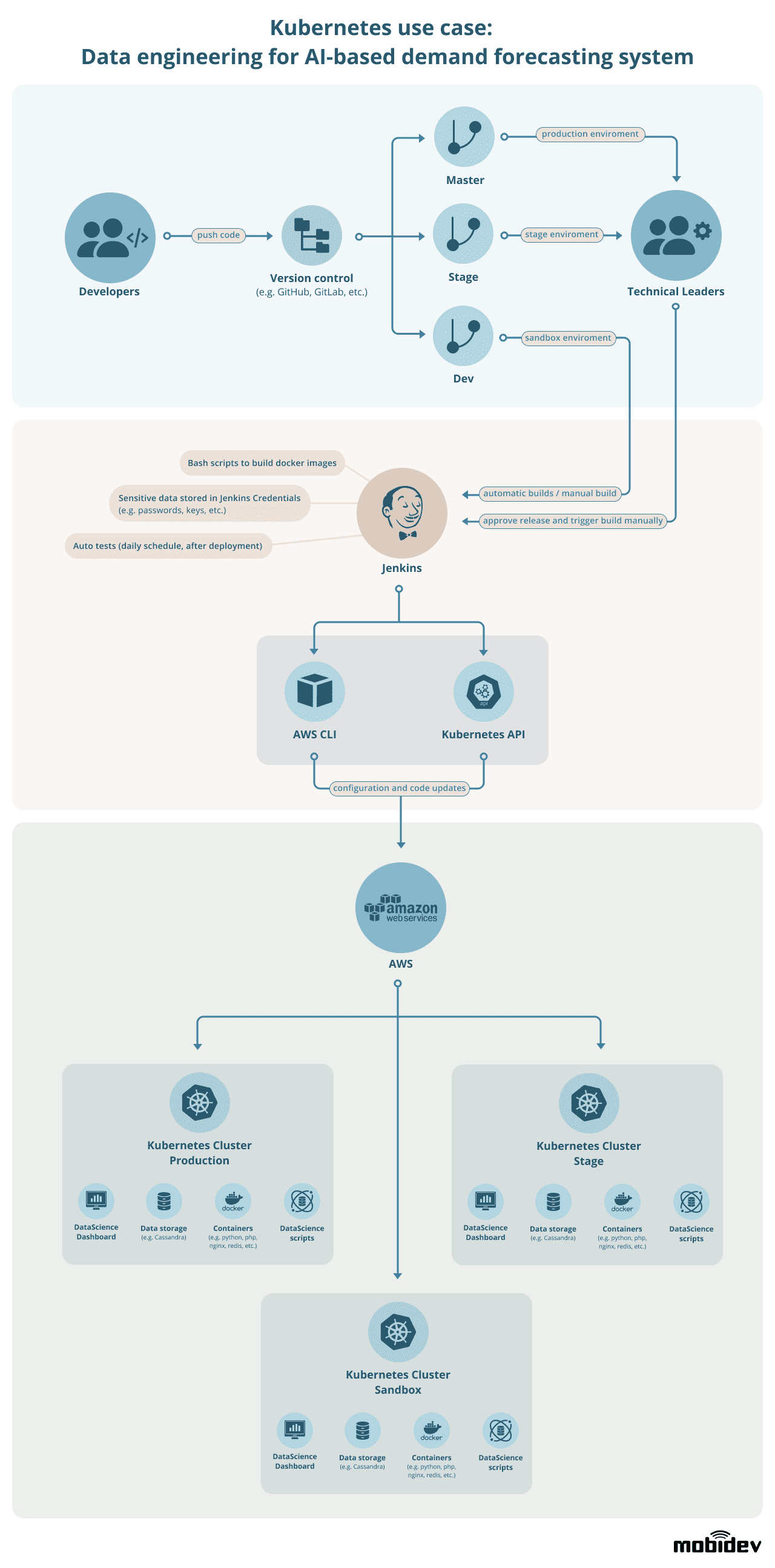

Case #1: Data engineering for AI-based demand forecasting system

Project description

MobiDev was developing a POS software and venue management system adopted by many bars and restaurants. Over time, a huge amount of historical POS sales data accumulated, and MobiDev’s tech experts came up with the idea of applying artificial intelligence (AI) algorithms to the data, to find patterns in previous sales and make predictions for the next period for each venue. An AI-based demand forecasting system was developed as an independent module integrated into the system.

The problems to solve

As AI computations required significant resources, virtual machines in the AWS EMR cloud service were used initially. The more venues that adopted the system, the more expensive the infrastructure became. The AI module’s CPU load was high overnight, when machine learning algorithms were processing the daily sales data, while the machines sat idle during the day. In order to decrease infrastructure costs, manual management of computing resources was implemented with the help of Docker Swarm.

At the MVP development stage it didn’t make sense to use Kubernetes, as it would have required a lot of research and adoption time. However, as the number of venues was expected to grow, a new approach to data engineering was needed to provide automation and scalability along with cost optimization.

How Kubernetes was used

In the project’s CI/CD pipeline, Kubernetes is used as the orchestrator for all computing resources based on Docker containers. There are three Kubernetes clusters, one for each environment (production, stage, sandbox). Kubernetes provides the ability to run all required workloads inside one cluster.

Tech tasks solved with Kubernetes

- Running historical data gathering scripts on a scheduled basis

- Storing data in a database that runs inside Kubernetes

- Running AI scripts after a successful historical data update

- Serving the API that interacts with the AI dashboard

- Hosting the AI dashboard, which shows the results of the AI scripts
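The ordering in this list, where the AI scripts run only after a successful historical data update, can be sketched as a simple gate. This is not the project’s actual code. In Kubernetes, scheduled work of this kind is commonly expressed as a CronJob, while the sketch below models only the "run the next step if the previous one succeeded" logic. The function names and return strings are hypothetical.

```python
from typing import Callable


def run_nightly_pipeline(
    gather_history: Callable[[], bool],
    run_forecast: Callable[[], None],
) -> str:
    """Run the data update, then the AI step only if the update succeeded."""
    if not gather_history():
        # Forecasting on stale or partial data is skipped entirely.
        return "skipped: historical data update failed"
    run_forecast()
    return "completed"


if __name__ == "__main__":
    # Stand-in steps for illustration; real steps would call the actual scripts.
    status = run_nightly_pipeline(
        gather_history=lambda: True,
        run_forecast=lambda: print("forecast step ran"),
    )
    print(status)
```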

Business tasks solved with Kubernetes

Kubernetes allowed us to implement auto-scaling and provided real-time computing resources optimization.

Performance and cost optimization

On Kubernetes, the AI scripts, which calculated the same logic for the same amount of data, returned results much faster than on AWS EMR while consuming fewer computing resources. The average time required to run the AI module scripts decreased tenfold for the same number of venues, compared to the previous EMR production environment.

Reliability improvement

System stability was the key reason that made us switch from AWS EMR to Kubernetes. On EMR, scripts sometimes failed to start for unknown reasons, and the logs didn’t give any helpful information.

Scalability improvement

On Amazon EMR, project development was limited by a maximum number of new venues that could be added in the future. Kubernetes removed that limitation and automated scaling, which is critical for fast-growing projects.

Project Summary

The system on Kubernetes provides results faster, consumes fewer computing resources, allows the client to reduce its AWS costs, and ensures stable and predictable product delivery.

Case #2: Data engineering for an AI video surveillance system

Another real-world Kubernetes use case MobiDev was involved in is smart auto-scaling of computing resources for a face blurring feature in a video surveillance system. The system consists of the following applications: front end, back end, back-end queues, and an AI-based feature for face blurring. Kubernetes is used as the orchestrator for all these applications.

When new video processing requests appear, the back end auto-scales with the help of the Kubernetes API, automatically adding more workers to process the requests.
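One plausible way to decide how many workers to add is to size the pool from the length of the request queue. The sketch below shows only that calculation and does not call the Kubernetes API. The per-worker capacity and the worker limits are assumptions, not figures from the project.

```python
def workers_needed(
    pending_requests: int,
    requests_per_worker: int,
    min_workers: int = 1,
    max_workers: int = 20,
) -> int:
    """Return how many workers to run for the current queue length."""
    if requests_per_worker <= 0:
        raise ValueError("requests_per_worker must be positive")
    # Integer ceiling division: a partly filled batch still needs a worker.
    wanted = (pending_requests + requests_per_worker - 1) // requests_per_worker
    return max(min_workers, min(max_workers, wanted))


if __name__ == "__main__":
    # Hypothetical load: 23 queued videos, each worker handles 5 at a time.
    print(workers_needed(pending_requests=23, requests_per_worker=5))
```

The lower bound keeps a worker ready when the queue is empty, and the upper bound caps cost during a spike.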

Kubernetes App Development

Kubernetes’ distributed architecture and scalability pair well with Machine Learning and Artificial Intelligence. As these technologies continue to mature, 2022 is the year to watch for growth in this space.

Enterprises should keep in mind that tools support business goals, and are not an end in themselves. 2020 was a year in which nearly every business was forced to reckon with unexpected changes. Kubernetes has the capability to accelerate web development through solutions built with a cloud-native ecosystem, while at the same time allowing for malleable use of applications and data, so that a company can succeed through the modernization of its platforms and apps.
