Having been involved in AR development since the launch of ARKit in 2017, I have learned that building AR projects is more difficult than it seems at first glance. This is especially true if you want to create not just a simple AR feature for fun, but a serious multi-functional product.

Over the years at MobiDev, I’ve had experience working on projects outside of just the entertainment industry, including work done in the fields of construction, retail, education, manufacturing, real estate, and more. This guide covers the development of such projects that require not just animations or graphics, but the design of complex systems for truly innovative solutions.

Technologies Used to Develop Augmented Reality

Technologies used in augmented reality app development can depend on a number of factors, like the type of hardware being used, the available power of the device, and what application AR is being used for. Each technology has its capabilities and limitations.

Mobile Augmented Reality Platforms

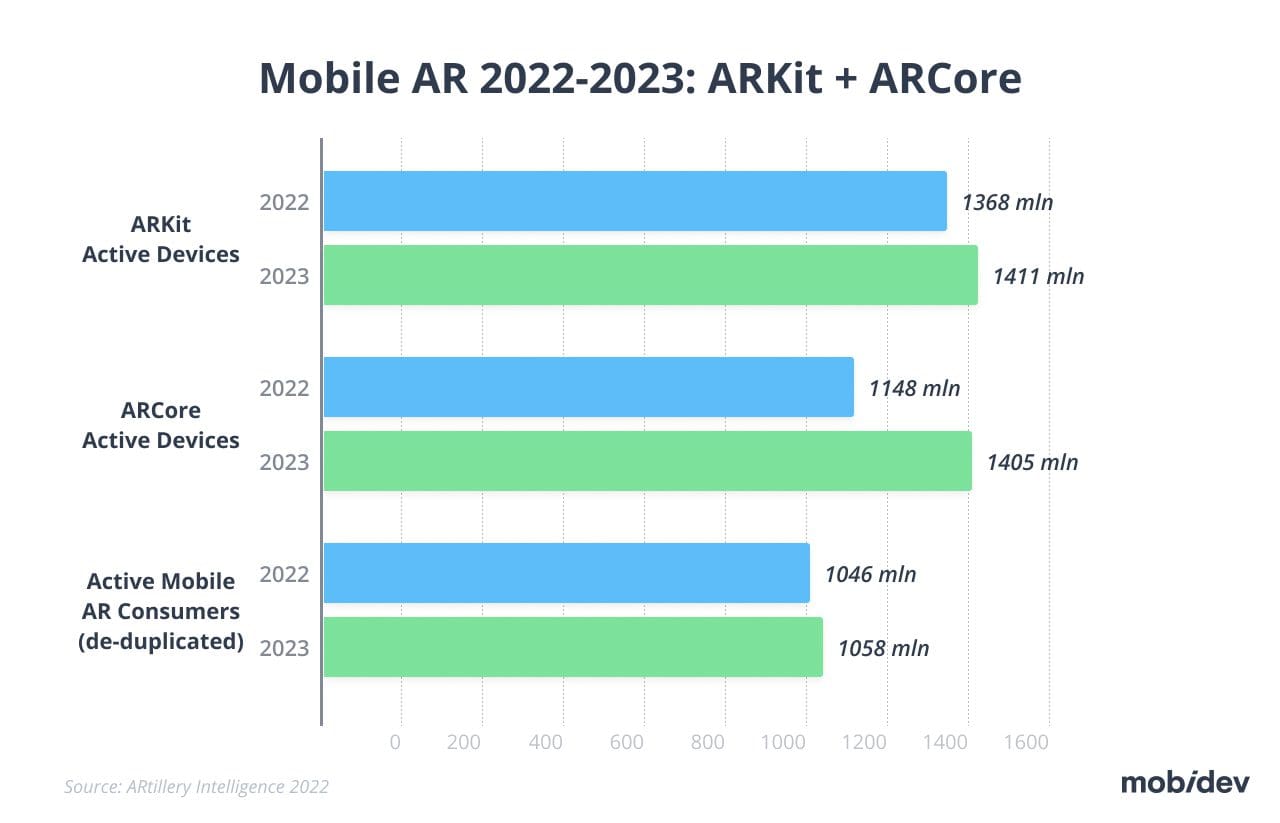

Smartphones have unique advantages compared to other AR platforms. They’re prevalent in the market and are extremely portable. This makes AR more accessible to many consumers since bulky headsets and elegant smart glasses haven’t quite hit the mainstream just yet. Because of this, mobile AR is a prime target for business applications.

Although mobile AR may not be the most powerful or immersive, it certainly has the potential to be very profitable and is one of the most important augmented reality trends to keep track of. Try-on solutions that let customers test cosmetics or clothes before buying, along with AR avatars and filters available on a smartphone, can help businesses communicate with customers even in a virtual environment.

Learn more about MobiDev AR Development Services to see how we can meet your needs.

There are three approaches toward mobile AR that businesses need to choose from:

- Native Android AR applications with ARCore

- Native iOS AR applications with ARKit

- Cross-platform apps

Native augmented reality app development allows developers to take full advantage of the power of a device. A cross-platform application, by contrast, may not be able to use powerful native features, but it can shorten development time. Creating the same app with native code on each platform will be more expensive, but if more power and features are required, it may be more effective in the long run. If the application is fairly simple, cross-platform code may be enough.

Despite Android dominating the global OS market, developers on GitHub have historically seemed to prefer ARKit based on the number of repositories over the past several years.

| Year | ARKit Repository Results | ARCore Repository Results |
| --- | --- | --- |
| 2021 | 4,504 | 1,874 |
| 2022 | 4,962 | 2,204 |
| 2023 | 5,210 | 2,355 |
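To put the gap in the table in perspective, the short Python sketch below computes the ARKit-to-ARCore ratio for each year and each platform's growth from 2021 to 2023. It uses only the figures from the table; the function and variable names are illustrative.

```python
# Repository counts copied from the table above: year -> (ARKit, ARCore).
REPO_COUNTS = {
    2021: (4504, 1874),
    2022: (4962, 2204),
    2023: (5210, 2355),
}


def arkit_to_arcore_ratio(year):
    """Return how many ARKit repositories exist per ARCore repository."""
    arkit, arcore = REPO_COUNTS[year]
    return arkit / arcore


def growth_percent(first_year, last_year, column):
    """Percent growth for one column (0 = ARKit, 1 = ARCore)."""
    start = REPO_COUNTS[first_year][column]
    end = REPO_COUNTS[last_year][column]
    return (end - start) / start * 100


if __name__ == "__main__":
    for year in sorted(REPO_COUNTS):
        print(year, "ARKit/ARCore ratio:", round(arkit_to_arcore_ratio(year), 2))
    print("ARKit growth 2021-2023 (%):", round(growth_percent(2021, 2023, 0), 1))
    print("ARCore growth 2021-2023 (%):", round(growth_percent(2021, 2023, 1), 1))
```

ARKit stays at a bit more than twice the ARCore count in every year, while the ARCore count grows faster in relative terms, so the ratio slowly narrows.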

AR Development For iOS Devices: ARKit

Let’s dive into the capabilities of ARKit for developing augmented reality applications and how they can be applied in different projects.

Location Anchors

Location anchors allow developers to affix virtual objects in the real world by using geographic coordinates. For example, location anchors could display a three-dimensional icon or text in space next to an iconic building. Because location anchors depend on Apple Maps data, their functionality may be limited in cities that Apple Maps does not support.

One of the most groundbreaking elements of location anchors is how location is approximated. GPS alone is simply not precise enough to position location anchors on a user’s screen. To compensate, users can look around with their camera so that the device can scan for features on the buildings around them. By matching these architectural features against Apple Maps Look Around data, the device can approximate the user’s location much more precisely.
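To illustrate why positioning accuracy matters here, the Python sketch below converts a geographic anchor into an east/north offset in meters from the user, then shows how far the rendered anchor shifts when the user's position is misreported. This is not how ARKit works internally; it is a simplified model using an equirectangular approximation, and all function names and coordinates are made up for illustration.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters


def geo_to_local_offset(user_lat, user_lon, anchor_lat, anchor_lon):
    """Approximate (east, north) offset in meters from the user to the anchor.

    Equirectangular approximation: reasonable for the short distances
    (tens to hundreds of meters) typical of an AR scene.
    """
    d_lat = math.radians(anchor_lat - user_lat)
    d_lon = math.radians(anchor_lon - user_lon)
    north = d_lat * EARTH_RADIUS_M
    east = d_lon * EARTH_RADIUS_M * math.cos(math.radians(user_lat))
    return east, north


def placement_shift_m(true_user, reported_user, anchor):
    """Distance in meters by which the anchor is misplaced when the
    user's position is reported as `reported_user` instead of `true_user`."""
    true_east, true_north = geo_to_local_offset(*true_user, *anchor)
    rep_east, rep_north = geo_to_local_offset(*reported_user, *anchor)
    return math.hypot(true_east - rep_east, true_north - rep_north)


if __name__ == "__main__":
    # Hypothetical coordinates, chosen only for the demonstration.
    anchor = (48.8584, 2.2945)
    true_user = (48.8580, 2.2940)
    reported_user = (48.8581, 2.2940)  # position off by 0.0001 degrees of latitude
    print("Offset to anchor (east, north):", geo_to_local_offset(*true_user, *anchor))
    print("Anchor misplaced by (m):", placement_shift_m(true_user, reported_user, anchor))
```

In this model an error of only 0.0001 degrees of latitude moves the virtual object by roughly 11 meters, which is enough to detach a label from the building it is supposed to annotate.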

This feature is extremely useful for building AR outdoor navigation solutions as well as AR apps for tourism and retail.

LiDAR and Depth API

Depth API is another valuable feature of ARKit. It uses one of the most powerful hardware features for AR on a mobile device: the LiDAR scanner on the iPad Pro and on the iPhone 12 Pro, 12 Pro Max, 13 Pro, 13 Pro Max, and 14 Pro smartphones. This enables much better analysis of scenes and allows real-world objects to occlude virtual objects with much better accuracy.
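The idea behind depth-based occlusion can be sketched in a few lines of Python. This is a conceptual model, not ARKit's implementation: it assumes a per-pixel depth value for the real scene (such as a LiDAR depth map would provide) and for the virtual content, and draws a virtual pixel only where it is closer to the camera than the real surface. The function name and the sample data are hypothetical.

```python
def composite_with_occlusion(camera_pixels, real_depth_m, virtual_pixels, virtual_depth_m):
    """Blend virtual content into a camera image using a per-pixel depth test.

    All four arguments are flat lists of equal length. A virtual depth of
    None means there is no virtual content at that pixel.
    """
    output = []
    for cam, real_d, virt, virt_d in zip(
        camera_pixels, real_depth_m, virtual_pixels, virtual_depth_m
    ):
        if virt_d is not None and virt_d < real_d:
            output.append(virt)  # virtual object is in front of the real surface
        else:
            output.append(cam)   # real surface hides the virtual object
    return output


if __name__ == "__main__":
    # One row of four pixels: a real sofa at 1.5 m covers the two middle pixels,
    # the wall behind it is at 4.0 m, and a virtual lamp sits at 2.0 m.
    camera = ["wall", "sofa", "sofa", "wall"]
    real_depth = [4.0, 1.5, 1.5, 4.0]
    virtual = ["lamp", "lamp", None, None]
    virtual_depth = [2.0, 2.0, None, None]
    print(composite_with_occlusion(camera, real_depth, virtual, virtual_depth))
```

The more accurate the real-world depth values, the cleaner the edge where a real object cuts across a virtual one, which is why a dedicated depth sensor improves occlusion.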

LiDAR is used not only for augmented reality projects but also in other areas where surface scanning is required. LiDAR lets you recognize both vertical and horizontal surfaces more accurately and scan complex surfaces. However, its capabilities aren’t limitless. For example, it cannot scan behind objects because it only scans in a straight line, so to scan an object from all sides you have to walk around it. LiDAR can also give incorrect results on highly reflective surfaces like glass or mirrors.

Android has an analog of LiDAR, the ToF (time-of-flight) sensor, but it is available on only a very small number of devices.

Raycasting API

Apple’s Raycasting API enables enhanced object placement in conjunction with depth data. This allows much more accurate placement of virtual objects on a variety of surfaces while taking into account their curvature and angle. For example, it’s possible to use this to place an object on the side of a wall or along the curves of a sofa rather than simply flat on the floor.
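At its core, raycasting means shooting a ray from the camera through a screen point and finding where it hits a surface. The Python sketch below shows only the simplest case, a ray hitting a flat plane such as a floor or a wall. It is a generic geometric illustration under that assumption, not Apple's API, and the function name is hypothetical.

```python
def raycast_to_plane(ray_origin, ray_direction, plane_point, plane_normal):
    """Return the 3D point where a ray hits a plane, or None if it misses.

    Vectors are (x, y, z) tuples. The plane is given by any point on it
    and its normal vector.
    """
    denom = sum(d * n for d, n in zip(ray_direction, plane_normal))
    if abs(denom) < 1e-9:
        return None  # ray is parallel to the plane
    to_plane = [p - o for p, o in zip(plane_point, ray_origin)]
    t = sum(v * n for v, n in zip(to_plane, plane_normal)) / denom
    if t < 0:
        return None  # plane is behind the camera
    return tuple(o + t * d for o, d in zip(ray_origin, ray_direction))


if __name__ == "__main__":
    camera = (0.0, 1.5, 0.0)  # phone held 1.5 m above the floor
    # Looking straight down at a floor plane (y = 0, normal pointing up).
    print(raycast_to_plane(camera, (0.0, -1.0, 0.0), (0.0, 0.0, 0.0), (0.0, 1.0, 0.0)))
    # Looking forward at a wall 2 m away (z = -2, normal facing the camera).
    print(raycast_to_plane(camera, (0.0, 0.0, -1.0), (0.0, 0.0, -2.0), (0.0, 0.0, 1.0)))
```

Placing objects on curved surfaces such as a sofa follows the same principle, except that the ray is tested against a mesh or depth data instead of a single plane.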

ARKit Updates

In recent years, ARKit brought several new features to improve AR experiences on iOS devices. These include better motion capture, improvements to camera access, and additional locations for Location Anchors. They also included Plane Anchors, a feature that allows tracking flat surfaces like tables. This makes it easier for those surfaces to be moved without disrupting the AR experience.

RoomPlan

A relatively new, cool feature of ARKit is the RoomPlan framework. RoomPlan allows you to get a 3D plan of a room in a matter of seconds using the iPad LiDAR scanner and AI processing of the received data. The quality of the output depends on the quality of the sensor and the type of surface. RoomPlan significantly accelerates and shortens the development of solutions such as AR measurement applications.

With RoomPlan you can output room scans in USD or USDZ file formats. These include the dimensions of the room and the items contained in the room, such as furniture. These file formats can then be imported into other software for further processing and development.

Case Study: AR Solution for Home Renovation

As part of our ARKit research here at MobiDev, we tested using ARKit for gaze tracking. This opens up a number of possibilities for iOS, such as eye-based gestures, vision-based website heatmap analytics, and preventing distracted or drowsy driving.

This is just one of many cases from our portfolio. You can check out more of our AR demos here.

AR Development For Android Devices: ARCore

In an effort to stay competitive, Google has kept developing ARCore so that it remains one of the most versatile AR development platforms in the world. Let’s explore some of the features that are used by developers.

Cloud Anchors

This tool allows users to place virtual objects in physical space that can be viewed by other users on their own devices. Google has made sure that Cloud Anchors can be seen by users on iOS devices as well.

On the Android side, ARCore is introducing new features to match ARKit’s advances. ARCore v1.33.0 introduces new Cloud Anchors endpoints and terrain anchors. These both improve the geographic anchoring of virtual objects. Google also introduced ARCore Geospatial API, which, similar to ARKit Location Anchors, utilizes data from mapping databases. In this case, Google Earth and Street View image data is used to identify the user’s geographic location and display virtual objects in those locations accurately.

The following MobiDev demo demonstrates how ARCore object detection features for a virtual user manual work.

AR Faces

ARCore enables developers to work with high-quality facial renderings by generating a 468-point 3D model. Masks and filters can be applied after the user’s face is identified. This is one of the most popular use cases for AR app features. AR faces help in building solutions like virtual cosmetics and accessory try-ons for eCommerce.

AR Images

AR business cards and advertising posters are just a few of the applications that are possible with augmented reality images. 2D markers can be used to implement these features, as well as more advanced solutions like AR indoor navigation.

We have tested ARCore with indoor navigation solutions at MobiDev. In the years since our first experiments, building applications like indoor positioning has become easier, making this technology more feasible for use in the real world. Check out this video to learn more about it.

Whichever platform you develop AR apps for, remember to test for mobile accessibility to ensure that a wider range of people can use your solution with a satisfactory experience.

ARKit vs ARCore Features Comparison

These two frameworks, for iOS and Android respectively, are nearly identical when viewed from a features perspective. However, the real difference between the two platforms is hardware consistency. Apple iPhone and iPad devices are largely more consistent when it comes to the behavior and capability of their hardware.

Meanwhile, Android devices are built by a number of different manufacturers with different specifications. That is, they have greater fragmentation, and besides, not all models support AR. Because of this, it becomes more difficult to deliver a consistent experience across many different Android devices. In our experience, customers prefer ARKit development with the prospect of expanding to Android in the future.

Given the limitations of Android hardware, it’s important to keep in mind how powerful your AR experience will be and what devices it should be running on. Should it primarily be running on the latest and greatest Samsung and Pixel devices with high-performance AR features, or should it be less intensive to run on more devices? The choice is yours, but experienced AR developers are always there to help you find the best solution.

Cross-Platform Mobile AR Development in Unity

If utilizing the full power of native AR frameworks on Android and iOS isn’t necessary and if your goal is faster time to market, cross-platform AR development in Unity may be a good option. Unity AR Foundation is a helpful framework for cross-platform augmented reality app development. It supports Plane detection, Anchors, Light estimation, 2D image tracking, 3D object tracking, body tracking, Occlusion, and more.

However, there are some missing features depending on the platform you’re on. For example, Unity AR Foundation has limitations with some features for ARCore at the moment, such as 3D object tracking, meshing, 2D & 3D body tracking, collaborative participants, and human segmentation. If you want your app to be cross-platform, you’ll have to keep these missing features in mind. In our experience, the best practice for developing AR solutions is to create native applications. This allows you to provide a high-quality user experience and avoid potential pitfalls.

Web-based Augmented Reality Technologies

On one hand, web AR is an extremely accessible technology because it can run on a multitude of devices without installing any additional software. On the other hand, web AR is very limited in features and power.

Some businesses are already utilizing web AR for technologies like virtual try-on solutions. For example, Maybelline, L’Oréal, and other companies have the option for users to virtually try on cosmetic products using their front-facing camera and web AR software.

Web AR is best implemented for simple tasks like facial recognition filters, changing the appearance or color of an object in a scene like the color of hair, replacing backgrounds for video conferencing, and more. However, it cannot provide a high level of accuracy and performance for more complex projects. It’s important to remember these limitations when deciding what platform your application should run on.

Augmented Reality Development For AR Wearables

When discussing wearable technology for augmented reality, we typically refer to gear like Microsoft HoloLens and more portable and comfortable glasses like Google Glass.

From a software engineering standpoint, Microsoft HoloLens development is based on the Microsoft technology stack and Azure Cloud.

Check our demo that showcases the capabilities of the Microsoft HoloLens for product presentation and educational purposes.

As for AR Glasses, most of the hardware is Android-based, and manufacturers provide SDKs for engineers to create apps. There are still some substantial aspects to be considered. The first is User Experience and User Interface, as the pattern of using such software is entirely different from what we are used to with smartphones. Then, engineers must be capable of building lightweight and optimized apps, as energy consumption is still the main pain point for many devices.

AI Comes to the Rescue When AR is Not Enough

Augmented reality is an amazing technology, but sometimes the capabilities of ready-made AR frameworks are not enough, especially if we are talking about complex innovative solutions. This is where artificial intelligence algorithms come to the rescue.

AI is used in one way or another under the hood of many features of AR tools. For example, ARKit’s RoomPlan applies machine learning algorithms for object detection and scene understanding. But if ready-made features still cannot provide the desired result, there is always a chance that the implementation of custom AI can change it for your project.

What AI can provide for AR projects:

- improved object detection, object recognition, and image tracking

- collecting more data about the environment for an improved AR experience

- improved accuracy of measuring distance and objects

For example, standard AR libraries have limited capabilities for creating a solution like a virtual fitting room or virtual try-on (poor identification of body parts, rendering issues, etc.). Such a solution therefore requires the development of a custom human pose estimation model that can detect key points in real time even if the processed image contains only part of the body.

Check out our Wi-Fi router diagnostics demo as an example of using a combination of AI and AR technologies.

As MobiDev has in-house AI labs, our expertise allows us to cover such complex projects that require a combination of AR and AI. We can use ready-made artificial intelligence tools or create custom models that will allow you to bring your innovative solution to life.

What You Should Know Before Starting an AR Project: Challenges and Best Practices

Now that we’ve covered the core technologies that enable AR experiences across multiple devices, let me share with you some things to consider when creating effective augmented reality applications. These include challenges your development team may face and ways to overcome them based on MobiDev’s experience.

1. Hardware limitations

AR frameworks are not the only place you might run into limitations. The capabilities of the devices your app runs on can limit your project as well. These limits can include processing power, memory, camera quality, and more. Optimize your app to work within these constraints to ensure a smooth and efficient experience for your users.

You might need to reduce the complexity of 3D models, use techniques to avoid rendering objects that are not visible to the camera, minimize background processes, and so on. The specific list of solutions depends on the requirements and features of your project, as well as the experience of the developers working on it.

Hardware limitations are one of the key things that affect app performance. Since AR applications require the rendering of 3D graphics in real-time, this can be a difficult task for some devices. That’s why it’s important to implement efficient algorithms to maintain smooth frame rates and low latency, optimize rendering pipelines by prioritizing critical elements of the AR experience, and reduce polygon counts.
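Two of the optimizations mentioned above, skipping objects the camera cannot see and lowering polygon counts, can be sketched in Python as follows. This is a simplified illustration: the visibility test uses a view cone rather than a full view frustum, and the function names, field of view, and distance thresholds are assumptions chosen for the example, not values from any AR framework.

```python
import math


def is_in_view(camera_pos, camera_forward, object_pos, half_fov_deg=30.0):
    """Return True if the object lies inside a cone around the camera's
    viewing direction. `camera_forward` is assumed to be a unit vector."""
    to_obj = [o - c for o, c in zip(object_pos, camera_pos)]
    dist = math.sqrt(sum(v * v for v in to_obj))
    if dist == 0:
        return True  # object is at the camera position
    cos_angle = sum(f * v for f, v in zip(camera_forward, to_obj)) / dist
    return cos_angle >= math.cos(math.radians(half_fov_deg))


def pick_lod(distance_m, thresholds=(2.0, 6.0)):
    """Pick a level of detail: 0 = full model, higher = fewer polygons."""
    for level, limit in enumerate(thresholds):
        if distance_m <= limit:
            return level
    return len(thresholds)


if __name__ == "__main__":
    camera_pos, forward = (0.0, 0.0, 0.0), (0.0, 0.0, -1.0)
    # Hypothetical scene objects and their positions in meters.
    scene = {"chair": (0.0, 0.0, -1.5), "table": (1.0, 0.0, -5.0), "plant": (0.0, 0.0, 3.0)}
    for name, pos in scene.items():
        if not is_in_view(camera_pos, forward, pos):
            print(name, "-> skipped (outside the view cone)")
            continue
        print(name, "-> render with LOD", pick_lod(math.dist(camera_pos, pos)))
```

Production engines perform these checks with bounding volumes and a proper frustum, but the trade-off is the same: less geometry submitted per frame means more headroom for tracking and rendering on weaker devices.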

2. Tracking Accuracy

Tracking quality is critical to the quality of the AR experience delivered. Achieving reliable tracking and calibration can be a challenge, especially in dynamic environments or when you’re dealing with objects of varying size, shape, or texture.

Different techniques can be used to help you overcome this challenge. For example, using visual markers placed in the environment allows the app to trigger AR more accurately. This is currently one of the best approaches for providing indoor AR navigation, as the app can precisely track and align virtual objects to placed markers. The application of machine learning and computer vision can also help improve tracking accuracy.

3. Testing AR Applications

Ensuring compatibility and consistent performance across different devices, environment conditions, and software versions is a crucial task for AR developers. But testing AR applications can be challenging, as we have to test the app not only on different devices, but with different physical objects, in different scenes, and under different lighting. Augmented reality projects therefore require special testing strategies and quality control specialists who have experience working with this type of software product.
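One way to keep this combinatorial testing manageable is to generate the test matrix explicitly, so that no combination of device, scene, and lighting is forgotten. The Python sketch below shows the idea; the device, scene, and lighting labels are hypothetical placeholders, and a real project would substitute its own target list.

```python
from itertools import product


def build_test_matrix(devices, scenes, lighting_conditions):
    """Return every device/scene/lighting combination as a list of dicts."""
    return [
        {"device": device, "scene": scene, "lighting": lighting}
        for device, scene, lighting in product(devices, scenes, lighting_conditions)
    ]


if __name__ == "__main__":
    # Placeholder values for illustration only.
    devices = ["phone with depth sensor", "phone without depth sensor"]
    scenes = ["empty room", "furnished room"]
    lighting = ["daylight", "dim indoor light", "mixed artificial light"]

    matrix = build_test_matrix(devices, scenes, lighting)
    print(len(matrix), "test runs")
    for case in matrix:
        print(case)
```

Even this small example produces 12 runs, which shows how quickly manual AR testing grows and why it pays to prioritize the combinations that matter most for a given product.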

Also, it’s important to keep your AR application updated and compatible with the latest device and operating system versions after release.

How MobiDev Can Help You

Our team of augmented reality app developers is no stranger to challenges. MobiDev has experience with developing applications for a number of different solutions across various industries.

Our augmented reality developers know how to overcome the limits of existing AR frameworks to create more effective solutions. Combining AR with advanced artificial intelligence and machine learning algorithms can help you provide more accurate and realistic AR experiences, improve app performance and increase customer satisfaction.

If you’re ready to start a project, feel free to reach out to us so we can talk about how we can achieve your vision.